Humanoid and cognitive robotics

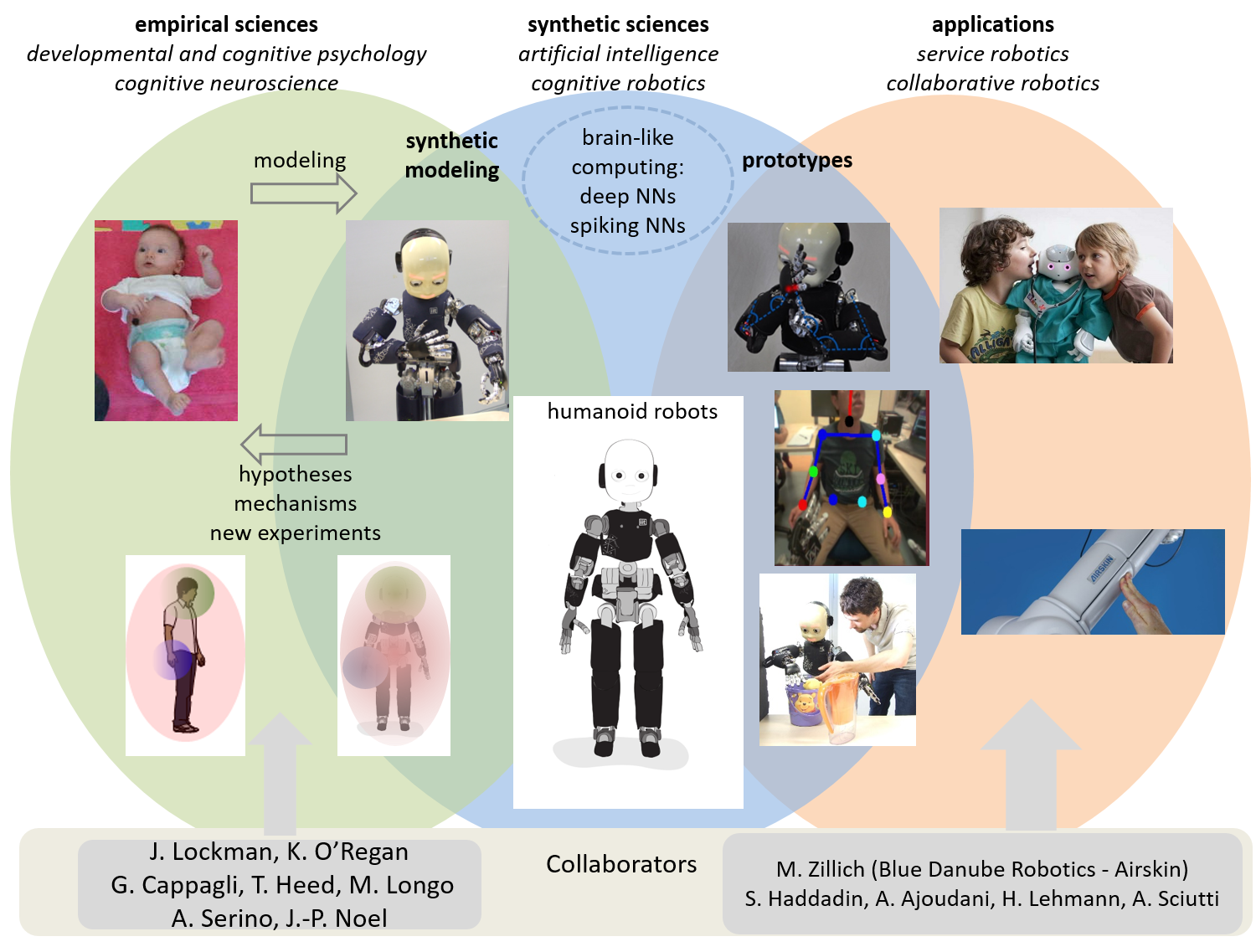

We’re a group working in humanoid, cognitive developmental, neuro-, and collaborative robotics. Robots with artificial electronic skins are one of our specialties. The group is coordinated by Assoc. Prof. Matej Hoffmann.

In our research, we employ the so-called synthetic methodology, or “understanding by building”, with two main goals:

- Understanding cognition and its development. In particular, we’re interested in the “body in the brain”: how babies learn to represent their bodies and the space around them (peripersonal space), and which brain mechanisms underlie these representations. We build embodied computational models on humanoid robots to uncover how these representations operate.

- Making robots safe and natural around humans. Taking inspiration from humans, we make robots exploit multimodal information (mostly vision and touch) so that they can share space with humans. We’re interested in both physical and social human-robot interaction.

For more details about our research, see the Research tab.

Our full affiliation is Vision for Robotics and Autonomous Systems, Department of Cybernetics, Faculty of Electrical Engineering, Czech Technical University in Prague.

Humanoids group

- 2024-01-25 There is a fully funded postdoc position on Robots with whole-body tactile sensing – see HERE for details.

- 2023-06-09 Matej Hoffmann gave an invited talk at The 6th International Workshop on Intrinsically Motivated Open-ended Learning in Paris.

- 2023-06 Matej and Sergiu were lecturers, and Jason and Filipe participants, at the Active Self Summer School, Tutzing, Germany.

- 2022-10-12 Matej Hoffmann delivered his habilitation lecture (YouTube video) and became Associate Professor. Congratulations!

- 2022-9-20 We had a strong presence at IEEE ICDL!! We were also nominated for the best paper award for our research on how infants learn about their bodies through tactile self-exploration and how to track their movements without motion capture.

- 2022-9-14 A new manuscript accepted to PLoS Computational Biology: Straka Z, Noel J-P, Hoffmann M (2022) A normative model of peripersonal space encoding as performing impact prediction [link].

- 2022-1-2 Two new PhD students starting: Jakub Rozlivek and Lukáš Rustler!

- 2021-12-14 Interview with Matej Hoffmann and our iCub robot featured in Fokus Václava Moravce on the human brain (our part starting at 1:06:23).

- 2021-11-30 CTU Prague Xmas Robot video, featuring our robots Pepper and iCub and lab members Petr and Lukáš.

- 2021-11-12 iCub and other robots from the Dept. featured in “Proč ne” magazine of Hospodářské noviny (in Czech). [pdf]

- 2021-11-9 Our iCub featured in the magazine ABC (issue 23, 2021) [pdf].

- 2021-10-28 Our robots with artificial skin on Czech TV Planeta YÓ.

- 2021-10-18 Our iCub humanoid has been presented in several media, including “Zprávičky” on the Czech TV, Czech Radio (Český Rozhlas – Radiožurnál), Novinky.cz, and more than 20 other media – see here for an overview.

- 2021-8-27 Our work on tactile exploration for body model learning through self-touch was published in IEEE TCDS. The video was featured in the IEEE Spectrum Robotics Video Friday.

- 2021-8-26 We received a Pepper humanoid robot!!!

- 2021-7-1 Two new postdocs joined our group: Sergiu Tcaci Popescu and Valentin Marcel. Welcome!

- 2021-6-15 We received the iCub humanoid robot!!! [youtube-video]

- 2020-11-27 Researchers’ Night – Even robots can have skin! Interactive demo and interview with Matej Hoffmann, available at this YouTube link [in Czech].

- 2020-9-4 Our work in social HRI has been presented at IEEE RO-MAN – a biomimetic hand (with Azumi Ueno) [pdf] and our Nao protecting its social space (with Hagen Lehmann) [pdf-arxiv] [slides with voice] [youtube-video].

- 2020-9-1 A new manuscript accepted to IEEE ICDL-Epirob and a new addition to the lab youtube channel: Gama, F.; Shcherban, M.; Rolf, M. & Hoffmann, M. (2020), Active exploration for body model learning through self-touch on a humanoid robot with artificial skin. [arxiv] [youtube-video]

- 2020-7-22 The Frontiers Research Topic Body Representations, Peripersonal Space, and the Self: Humans, Animals, Robots, guest-edited by Matej Hoffmann, has closed with 19 articles; the Editorial and e-book (download from the RT page) are published.

- 2020-5-4 We are on Twitter. You can follow us here https://twitter.com/humanoidsCTU.

- 2020-2-5 Matej Hoffmann and Zdenek Straka presented our work at the Human Brain Project summit.

- 2020-1-29 Interview with Matej Hoffmann in “Control Engineering Czech Republic” [in Czech].

- 2020-1-28 Matej Hoffmann and Petr Svarny were invited speakers at “Robots 2020” – Trends in Automation…

- 2019-11-12 Matej Hoffmann was awarded the “GAČR EXPRO” project for excellence in fundamental research from the Czech Science Foundation.

- 2018-11-19 Z. Straka and J. Stepanovsky visited the HBP Summit – see the report about this and our work in the NRP Platform.

- 2018-10-29: We are partners in a new European research project (CHIST-ERA call) Interactive Perception-Action-Learning for Modelling Objects (IPALM) coordinated by Imperial College London (2019-2022).

- 2018-10-26: The Learning Body Models: Humans, Brains, and Robots seminar at Lorentz center has been a great success!

- 2018-10-19: Our new Nao robot with artificial skin is up and running! See the video and the “autonomous touch video”.

- 2018-06-06: A public-oriented article about our research and its participation as an HBP Partnering Project at agenciasinc.es

- 2018-04-26: Matej Hoffmann was a member of the IIT Co-Aware team winning the KUKA Innovation Award 2018: Real-World Interaction Challenge. See the video.

- 2018-01 Our project has become a Partnering Project of the Human Brain Project.

- 2018 – Matej Hoffmann is the lead Topic Editor for this Frontiers Research Topic: Open for submissions!

- 2018-01: Work in collaboration with IIT Genoa and Yale University on “Compact real-time avoidance on a humanoid robot for human-robot interaction” has been accepted to the HRI ’18 Conference held in Chicago. [pdf @ arxiv] [youtube video]

- 2017-11-09: Matej Hoffmann will give a talk to the public at the Science and Technology week on 10.11.2017. (talk online – in Czech)

- 2017-09-14: Zdenek Straka and Matej Hoffmann were awarded the ENNS Best Paper Award at the 26th International Conference on Artificial Neural Networks (ICANN17) for their paper Learning a Peripersonal Space Representation as a Visual-Tactile Prediction Task.

- 2017-07-20: Our robot homunculus was featured in the Italian magazine Focus and on the iCub YouTube channel (extended version on our channel). The article is available here: [early access – IEEE Xplore][postprint-pdf].

- 2017-04-18: A 4-page cover story about humanoid robotics in Respekt 16/2017 (a major Czech weekly magazine; a larger section is available for free at ihned.cz). Matej Hoffmann and Zdenek Straka were interviewed and photographed with the robots. The online addition to the story includes a video and coverage of rescue robots.

- Matej Hoffmann (Associate Professor), Google Scholar profile

- Valentin Marcel, Google Scholar profile

- Sergiu T. Popescu, Google Scholar profile

- Zdenek Straka, Google Scholar profile

- Filipe Gama

- Shubhan Patni

- Jason Khoury

- Jakub Rozlivek, Google Scholar profile

- Lukas Rustler, Google Scholar profile

- Tomas Chaloupecky

- Bedrich Himmel

Alumni

- Karla Stepanova (Postdoc), Google Scholar profile

- Petr Svarny (PhD student, graduated 2023), Google Scholar profile

More information can also be found here.

Models of body representations

How do babies learn about their bodies? Newborns probably do not have a holistic perception of their body; instead, they begin to pick up correlations in the streams of individual sensory modalities (in particular visual, tactile, and proprioceptive). The structure in these streams allows them to learn the first models of their bodies. The mechanisms behind these processes are largely unclear. In collaboration with developmental and cognitive psychologists, we aim to shed more light on this topic by developing robotic models. See HERE for more details.

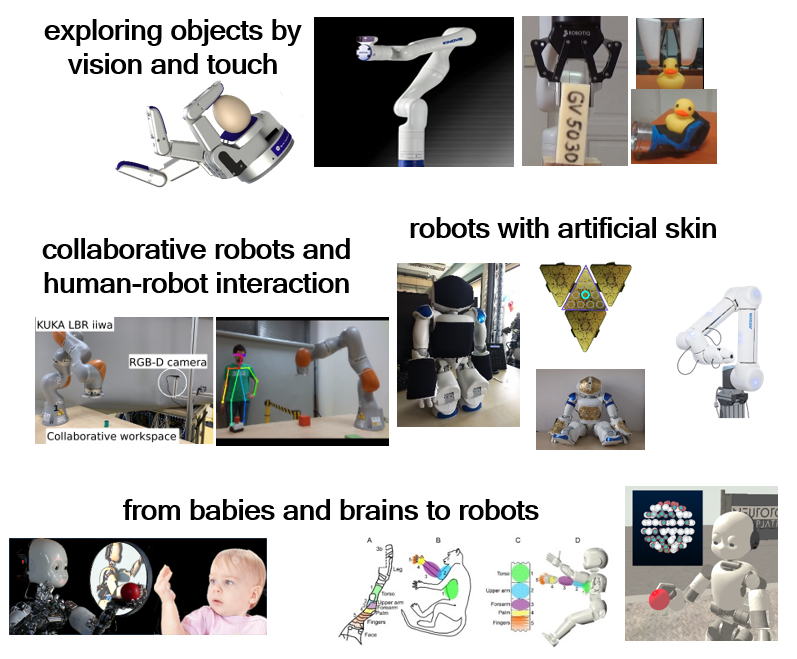

Collaborative robots and artificial touch

We target two application areas where robots with sensitive skins and multimodal awareness provide a key enabling technology:

Robots are leaving the factory, entering domains that are far less structured, and starting to share living spaces with humans. As a consequence, they need to adapt dynamically to unpredictable interactions with people and guarantee safety at every moment. “Body awareness” acquired through artificial skin can be used not only to improve reactions to collisions; when coupled with vision, it can be extended to the space immediately surrounding the body (so-called peripersonal space). This facilitates collision avoidance and contact anticipation, eventually leading to safer and more natural interaction of the robot with objects, including humans.

Standard robot calibration procedures require prior knowledge of a number of quantities in the robot’s environment, and these conditions have to be reproduced whenever the robot needs to be recalibrated. This has motivated alternative, more “self-contained” solutions to the self-calibration problem that the robot can perform automatically. These typically rely on the robot observing specific points on its own body with its own camera(s). The advent of robotic skin technologies opens up completely new approaches. In particular, the kinematic chain can be closed through self-touch, which provides the necessary redundant information and extends data collection from the end-effector to the whole body surface. Furthermore, truly multimodal calibration, using visual, proprioceptive, tactile, and inertial information, becomes possible.
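The idea of closing the kinematic chain through self-touch can be illustrated with a deliberately simplified toy model. This sketch is not the group’s calibration method. It assumes two planar 2-link arms with shoulders at known positions (-d, 0) and (+d, 0), perfect fingertip-to-fingertip contacts, and unknown link lengths as the only parameters. At each touch both forward-kinematic chains must end at the same point, which yields two equations that happen to be linear in the link lengths, so ordinary least squares suffices. All function names, dimensions, and noise levels are hypothetical; calibrating a real robot (full 3D kinematic parameters, contacts detected by skin taxels) generally leads to a nonlinear optimization problem.

```python
import numpy as np


def fk(base_x, lengths, q):
    """Fingertip (x, y) of a planar 2-link arm whose shoulder sits at (base_x, 0)."""
    l1, l2 = lengths
    x = base_x + l1 * np.cos(q[0]) + l2 * np.cos(q[0] + q[1])
    y = l1 * np.sin(q[0]) + l2 * np.sin(q[0] + q[1])
    return np.array([x, y])


def ik(base_x, lengths, target):
    """Analytic 2-link inverse kinematics; used here only to simulate self-touches."""
    l1, l2 = lengths
    x, y = target[0] - base_x, target[1]
    c2 = (x**2 + y**2 - l1**2 - l2**2) / (2 * l1 * l2)
    q2 = np.arccos(np.clip(c2, -1.0, 1.0))
    q1 = np.arctan2(y, x) - np.arctan2(l2 * np.sin(q2), l1 + l2 * np.cos(q2))
    return np.array([q1, q2])


def calibrate_self_touch(q_left, q_right, d):
    """Least-squares estimate of [l1_left, l2_left, l1_right, l2_right].

    Shoulders are assumed at (-d, 0) and (+d, 0). At each contact the two
    fingertips coincide, closing the kinematic chain; this gives two equations
    that are linear in the unknown link lengths.
    """
    rows, rhs = [], []
    for a, b in zip(q_left, q_right):
        # x-coordinates match: -d + left reach = +d + right reach
        rows.append([np.cos(a[0]), np.cos(a[0] + a[1]),
                     -np.cos(b[0]), -np.cos(b[0] + b[1])])
        rhs.append(2.0 * d)
        # y-coordinates match: left reach = right reach
        rows.append([np.sin(a[0]), np.sin(a[0] + a[1]),
                     -np.sin(b[0]), -np.sin(b[0] + b[1])])
        rhs.append(0.0)
    params, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return params


def simulate_and_calibrate(seed=0, noise=0.002, n_contacts=100):
    """Simulate touches with hypothetical true lengths, add joint-angle noise, calibrate."""
    rng = np.random.default_rng(seed)
    d = 0.15                                            # hypothetical half shoulder width [m]
    true_left, true_right = (0.20, 0.18), (0.22, 0.17)  # hypothetical link lengths [m]
    # Contact points in front of the body, reachable by both arms
    contacts = np.column_stack([rng.uniform(-0.05, 0.05, n_contacts),
                                rng.uniform(0.20, 0.30, n_contacts)])
    # Joint readings at each touch, corrupted by Gaussian encoder noise [rad]
    q_left = [ik(-d, true_left, p) + rng.normal(0.0, noise, 2) for p in contacts]
    q_right = [ik(d, true_right, p) + rng.normal(0.0, noise, 2) for p in contacts]
    estimate = calibrate_self_touch(q_left, q_right, d)
    return estimate, np.array(true_left + true_right)


if __name__ == "__main__":
    est, truth = simulate_and_calibrate()
    print("estimated lengths:", np.round(est, 4))
    print("true lengths:     ", truth)
```

No camera or external measurement enters the estimate: the known shoulder separation 2d fixes the overall scale, and the variety of touch configurations makes the four link lengths identifiable.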

- Whole-body awareness for safe and natural interaction: from brains to collaborative robots. “GAČR EXPRO” project for excellence in fundamental research from the Czech Science Foundation (2020-2024)

- Interactive Perception-Action-Learning for Modelling Objects (IPALM). European project (Horizon 2020, FET, ERA-NET Cofund, CHIST-ERA) coordinated by Imperial College London (2019-2022).

- Robot self-calibration and safe physical human-robot interaction inspired by body representations in primate brains. Czech Science Foundation (2017-2019)

- We are also a Partnering Project of the Human Brain Project.

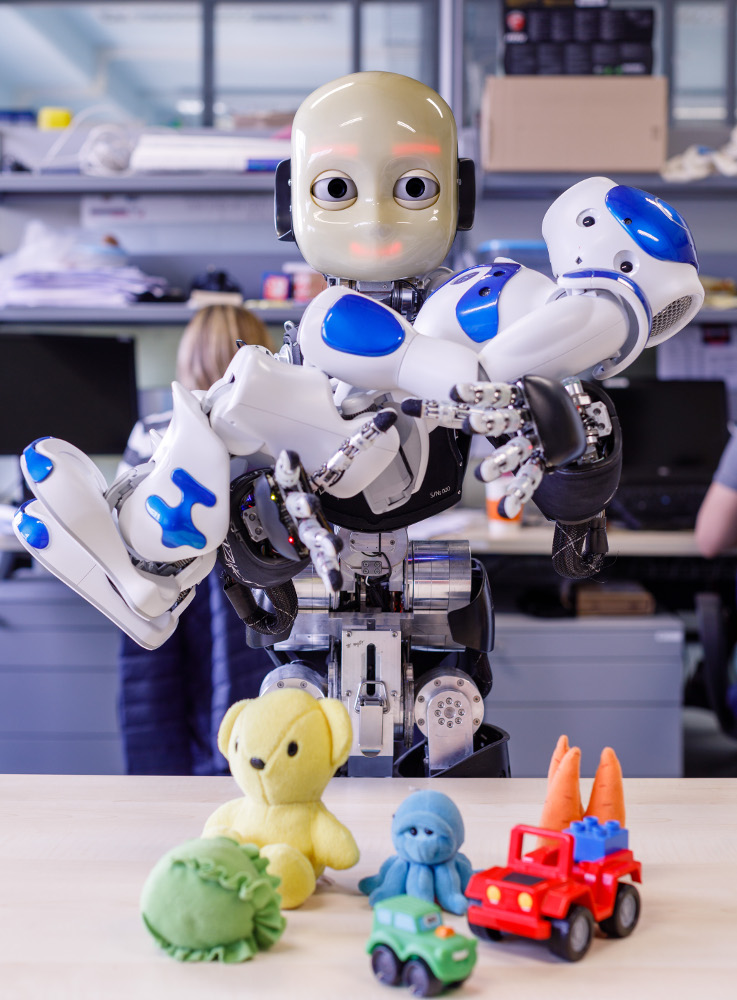

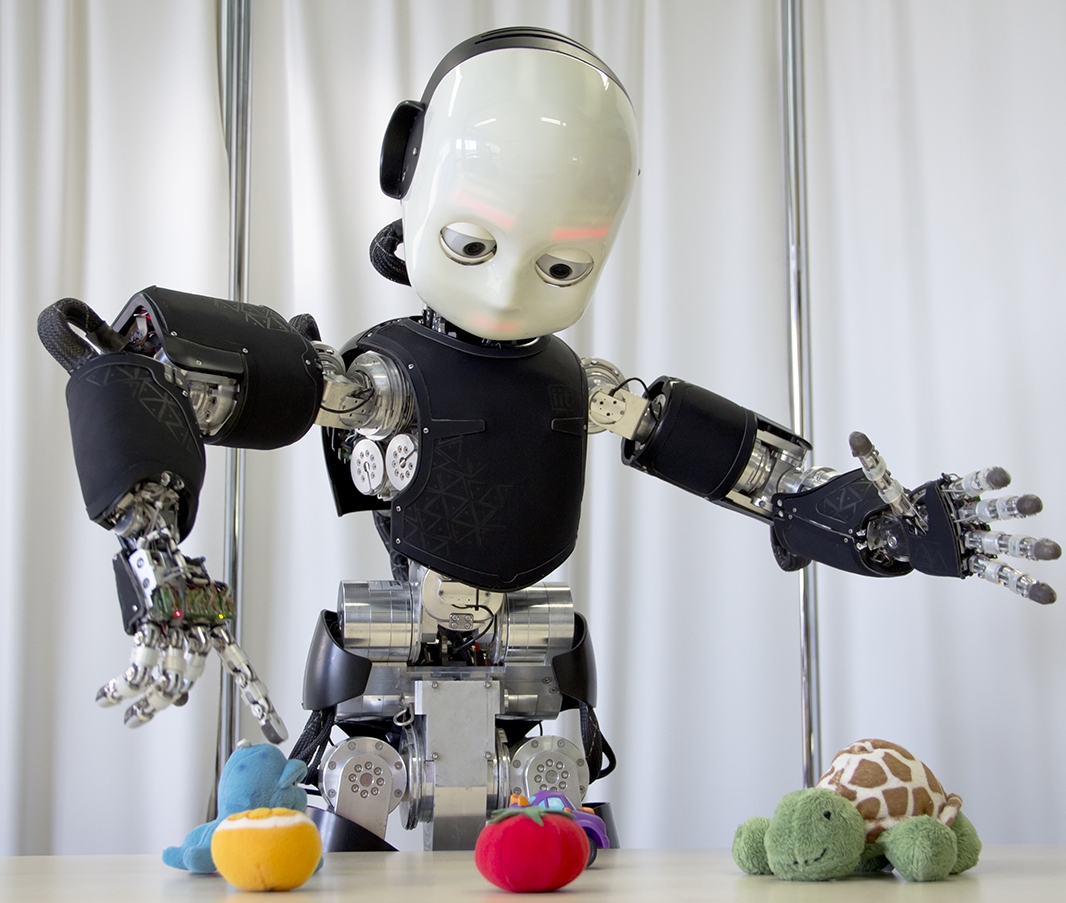

Humanoid robots

Photo credits: Duilio Farina, Italian Institute of Technology.

Photo credits: Laura Taverna, Italian Institute of Technology.

iCub

A humanoid robot from the Italian Institute of Technology with the body proportions of a 4-year-old child, 53 degrees of freedom, whole-body sensitive skin, and much more… See http://icub.org/ or the iCub YouTube channel.

Pepper

Pepper is a humanoid robot from Softbank Robotics (formerly Aldebaran). The robot is intended for human-robot interaction research and social robotics. You can also meet this robot in various places, such as Prague Airport.

Nao

Two Nao humanoid robots from Softbank Robotics (formerly Aldebaran). The robots are used for developmental robotics as well as human-robot interaction research.

- Nao blue. Version Evolution (V5). Specially equipped with artificial sensitive skin (like the iCub) on torso, face, and hands.

- Nao red. Version 3+.


Collaborative manipulator arms

KUKA LBR iiwa + Barrett Hand

A 7 DoF collaborative robot (KUKA LBR iiwa R800) with joint torque sensing in every axis and a 3-finger Barrett Hand (BH8-282) with 96 tactile sensors and joint torque sensing in every fingertip.

UR10e + Airskin + OnRobot gripper / QB Soft Hand

A 6 DoF Universal Robots UR10e manipulator covered, including the gripper (OnRobot RG6), with the Airskin collision sensor.

Kinova Gen3 arm + Robotiq gripper

An ultralightweight 7 DoF arm with an RGB-D camera embedded in the wrist and a Robotiq 2F gripper.

An informal list of open and ongoing projects is available on the webpages of Matej Hoffmann. You may also have a look at the past projects, some of which have received awards or resulted in publications.

A list of currently open topics with a formal description, supervised by Matej Hoffmann and possibly co-supervised by some of the group members, is available here: EN-LINK / CZ-LINK.

Topics other than those listed can also be defined upon request: simply drop by KN-E211 or write an email to Matej Hoffmann or other group members.