The project is entitled “Learning Universal Visual Representation with Limited Supervision” and will last 5 years (2021-2025).

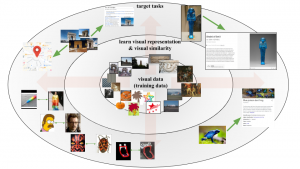

Human perception of visual similarity, i.e. telling whether two objects or scenes are visually similar or not, relies on a number of different factors, such as shape, texture, color, local or global appearance, and motion. Human ability to evaluate visual resemblance is usually not affected by the variability of the surrounding world nor by the domain. Visual recognition performed by machines often relies on the estimation of visual similarity. This project targets to generate models for such estimation that well capture a variety of perceptual factors, are applicable to a number of domains, and are discriminative even for previously unseen objects. To learn such models, large amounts of examples that are annotated by humans are required, but are also difficult to obtain. Therefore, another goal of the project is to automatically extend a small set of annotations over large unlabeled image collections and over domains. Possible applications include visual recognition of particular objects, in the domain of artworks, products, and urban landscapes, and fine-grained categorization, in the domain of animal/plant species and human activities.