Biomedical Imaging Algorithms Research Group

We’re a group researching humanoid, cognitive developmental, neuro-, and collaborative robotics. Robots with artificial electronic skins are one of our specialties.

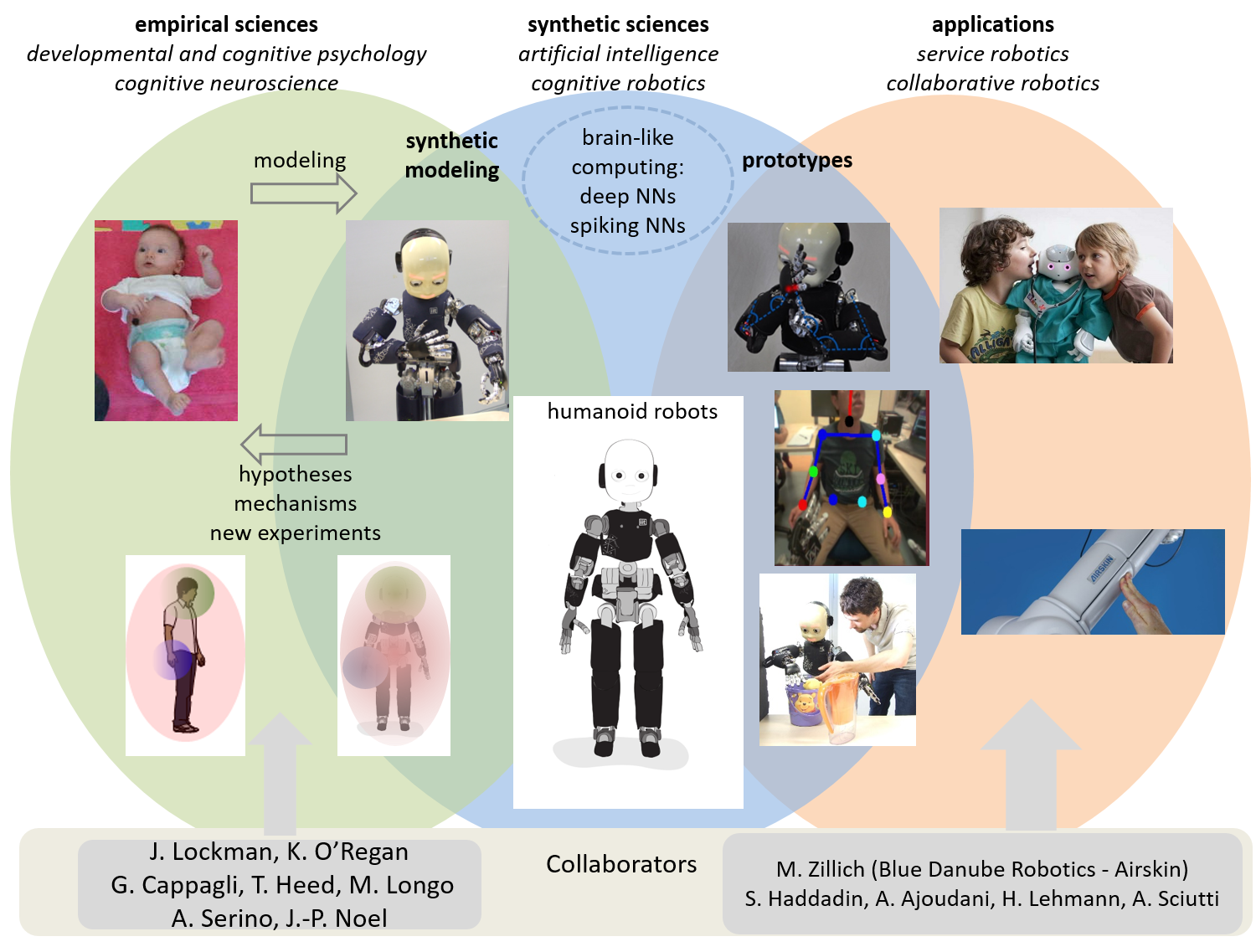

In our research, we employ the so-called synthetic methodology, or “understanding by building”, with two main goals:

- Understanding cognition and its development. In particular, we’re interested in the “body in the brain”: how babies learn to represent their bodies and the space around them (peripersonal space), and which brain mechanisms underlie this. We build embodied computational models on humanoid robots to uncover how these representations operate.

- Making robots safe and natural around humans. Taking inspiration from humans, we make robots exploit multimodal information (mostly vision and touch) so that they can share space with humans. We’re interested in both physical and social human-robot interaction.

For more details about our research, see the corresponding tab.

Our full affiliation is Vision for Robotics and Autonomous Systems, Department of Cybernetics, Faculty of Electrical Engineering, Czech Technical University in Prague.

Humanoids group

- Matej Hoffmann (Assistant Professor, coordinator)
- Tomas Svoboda (Associate Professor)
- Karla Stepanova (Postdoc)
- Zdenek Straka (PhD Student)
- Petr Svarny (PhD Student)
- Filipe Gama (PhD Student)
- Shubhan Patni (PhD Student)


Models of body representations

How do babies learn about their bodies? Newborns probably do not have a holistic perception of their body; instead, they start to pick up correlations in the streams of individual sensory modalities (in particular visual, tactile, and proprioceptive). The structure in these streams allows them to learn the first models of their bodies. The mechanisms behind these processes are largely unclear. In collaboration with developmental and cognitive psychologists, we aim to shed more light on this topic by developing robotic models.

Safe physical human-robot interaction

Robots are leaving the factory, entering far less structured domains and starting to share living spaces with humans. As a consequence, they need to adapt dynamically to unpredictable interactions with people and guarantee safety at every moment. “Body awareness” acquired through artificial skin can be used not only to improve reactions to collisions; when coupled with vision, it can be extended to the space around the body (so-called peripersonal space), facilitating collision avoidance and contact anticipation and eventually leading to safer and more natural interaction of the robot with objects, including humans.
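The page does not say how peripersonal space is represented in the group's models. As a minimal sketch, assume each skin taxel has a known 3D position, obstacles (for example a human hand tracked by a camera) arrive as 3D points, and a taxel's activation rises linearly from 0 at a hypothetical 0.2 m margin to 1 at contact. Scaling the commanded speed by the strongest activation is one simple, assumed way to turn this into contact anticipation; `pps_activation` and `speed_scale` are hypothetical names, not the group's code.

```python
import numpy as np


def pps_activation(taxels, obstacles, margin=0.2):
    """Activation in [0, 1] per skin taxel: 1 at contact, falling linearly to 0 at `margin` metres."""
    taxels = np.asarray(taxels, dtype=float)
    obstacles = np.asarray(obstacles, dtype=float)
    if obstacles.size == 0:
        return np.zeros(len(taxels))  # nothing nearby: no taxel is activated
    # Pairwise distances between every taxel (N, 3) and every obstacle point (M, 3).
    dist = np.linalg.norm(taxels[:, None, :] - obstacles[None, :, :], axis=-1)
    nearest = dist.min(axis=1)  # distance from each taxel to its closest obstacle point
    return np.clip(1.0 - nearest / margin, 0.0, 1.0)


def speed_scale(activations):
    """Hypothetical policy: scale the commanded speed by 1 - (strongest activation)."""
    activations = np.asarray(activations, dtype=float)
    return 1.0 if activations.size == 0 else float(1.0 - activations.max())


if __name__ == "__main__":
    taxels = np.array([[0.0, 0.0, 0.0], [0.1, 0.0, 0.0]])  # two taxel positions on the robot body
    hand = np.array([[0.25, 0.0, 0.0]])                    # e.g. a human hand tracked by vision
    act = pps_activation(taxels, hand)
    print(act, speed_scale(act))
```

A linear ramp is the simplest choice; a smoother profile or per-taxel margins would fit inside `pps_activation` without changing its interface.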

Automatic robot self-calibration

Standard robot calibration procedures require prior knowledge of a number of quantities from the robot’s environment, and the same setup has to be available whenever recalibration is performed. This has motivated alternative solutions to the calibration problem that are more “self-contained” and can be performed automatically by the robot. These typically rely on self-observation of specific points on the robot using the robot’s own camera(s). The advent of robotic skin technologies opens up completely new approaches. In particular, the kinematic chain can be closed, and the necessary redundant information obtained, through self-touch, extending data collection from the end-effector to the whole body surface. Furthermore, truly multimodal calibration, using visual, proprioceptive, tactile, and inertial information, becomes possible.
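The paragraph describes closing the kinematic chain through self-touch but not a specific estimator. As a minimal sketch, assume two planar two-link arms with known base positions and unknown link lengths: whenever the end-effectors touch, both forward-kinematics chains must reach the same point. For fixed joint angles a planar end-effector position is linear in the link lengths, so each touch gives two linear equations and the lengths follow from ordinary least squares. The arm geometry, the simulated touches, and all function names are hypothetical; real self-calibration typically also estimates joint offsets and other kinematic parameters, which makes the problem nonlinear.

```python
import numpy as np


def link_directions(q):
    """Unit vector of every link of a planar serial arm, given its joint angles q."""
    phi = np.cumsum(q)  # absolute angle of each link
    return np.stack([np.cos(phi), np.sin(phi)], axis=1)  # shape (n_links, 2)


def forward(base, lengths, q):
    """Planar forward kinematics: end-effector position, linear in `lengths`."""
    return base + lengths @ link_directions(q)


def ik_2link(base, lengths, target):
    """Analytic IK for a 2-link planar arm (one elbow branch); None if unreachable."""
    x, y = target - base
    l1, l2 = lengths
    c2 = (x * x + y * y - l1**2 - l2**2) / (2 * l1 * l2)
    if abs(c2) > 1.0:
        return None
    q2 = np.arccos(c2)
    q1 = np.arctan2(y, x) - np.arctan2(l2 * np.sin(q2), l1 + l2 * np.cos(q2))
    return np.array([q1, q2])


def calibrate_from_self_touch(base_l, base_r, touches):
    """Least-squares estimate of [left lengths, right lengths] from self-touch configurations."""
    rows, rhs = [], []
    for q_l, q_r in touches:
        # Touch constraint: base_l + D_l^T l_l = base_r + D_r^T l_r
        #   => [D_l^T, -D_r^T] @ [l_l; l_r] = base_r - base_l   (two equations per touch)
        rows.append(np.hstack([link_directions(q_l).T, -link_directions(q_r).T]))
        rhs.append(base_r - base_l)
    lengths, *_ = np.linalg.lstsq(np.vstack(rows), np.concatenate(rhs), rcond=None)
    return lengths


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    base_l, base_r = np.array([-0.2, 0.0]), np.array([0.2, 0.0])
    true_l, true_r = np.array([0.30, 0.25]), np.array([0.28, 0.22])  # unknown to the estimator

    touches = []
    while len(touches) < 20:
        # Simulated self-touch: move the left arm, then find a right-arm pose that touches it.
        q_l = rng.uniform(-np.pi, np.pi, size=2)
        q_r = ik_2link(base_r, true_r, forward(base_l, true_l, q_l))
        if q_r is not None:
            # Add small joint-encoder noise (radians) to mimic real measurements.
            touches.append((q_l + rng.normal(0, 1e-3, 2), q_r + rng.normal(0, 1e-3, 2)))

    print("estimated:", calibrate_from_self_touch(base_l, base_r, touches))
    print("true:     ", np.concatenate([true_l, true_r]))
```

Because the two bases are distinct, the right-hand side `base_r - base_l` is nonzero, which fixes the absolute scale; if both chains started at the same point, the equations would be homogeneous and the lengths would be determined only up to a common factor.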


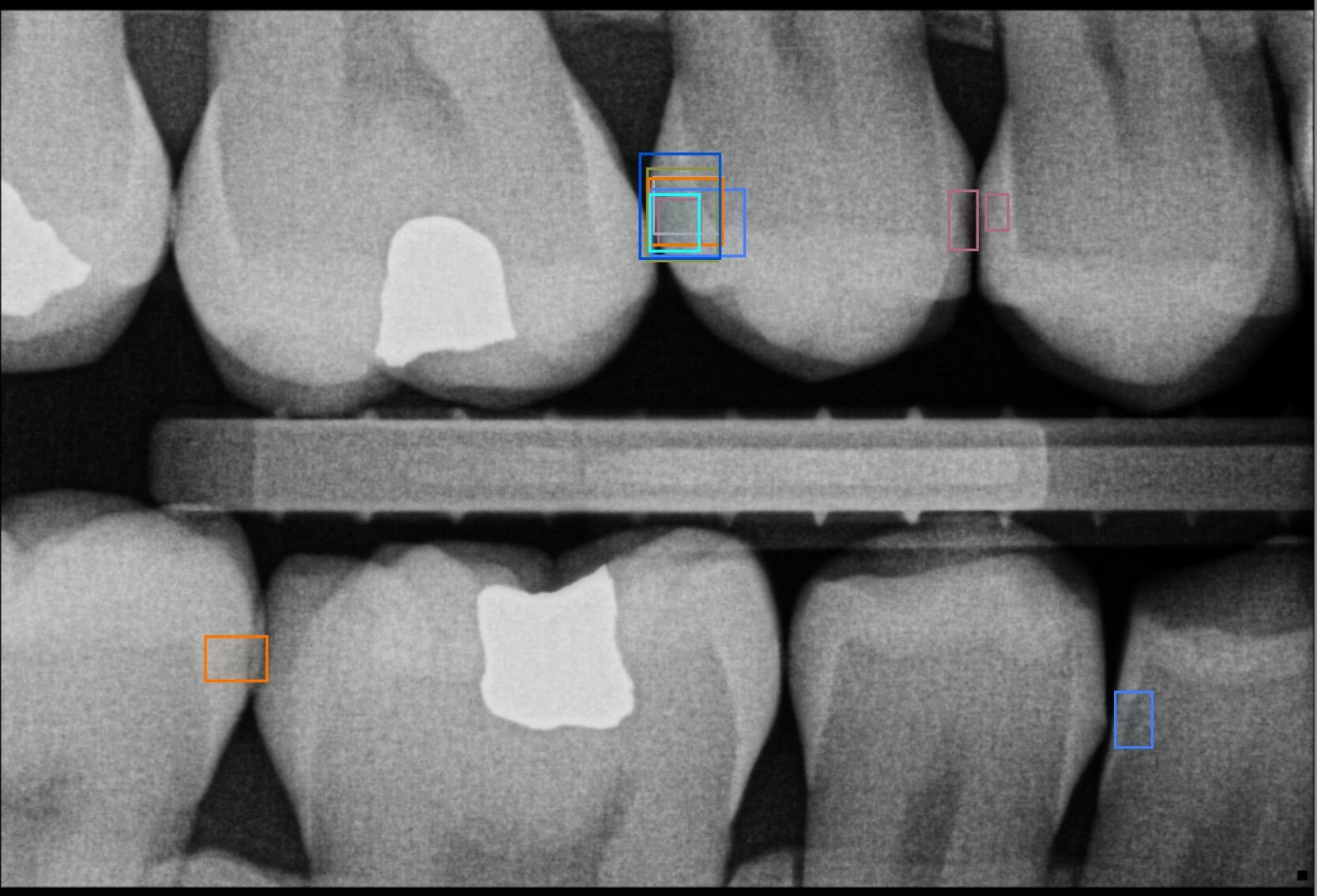
